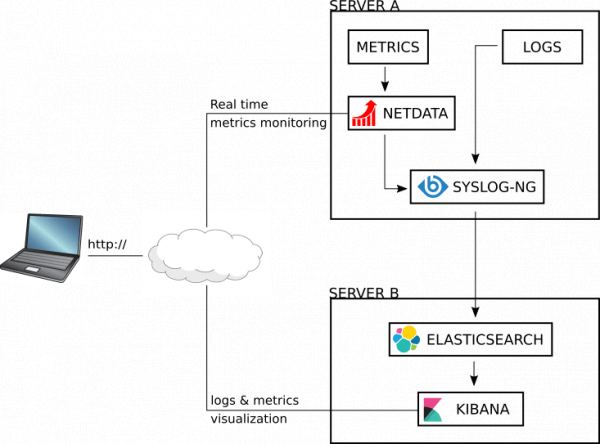

netdata is a system for distributed real-time performance and health monitoring. You can use syslog-ng to collect and filter data provided by netdata and then send it to Elasticsearch for long-term storage and analysis. The aim is to send both metrics and logs to an Elasticsearch instance, and then access it via Kibana. You could also use Grafana for visualization, but that is not covered in this blog post.

This is the fifth blog post in a six-part series on storing logs in Elasticsearch using syslog-ng. You’ll find a link to the next and previous parts in the series at the end of this post. You can also read the whole Elasticsearch series in a single white paper.

I would like to thank Fabien Wernli and Bertrand Rigaud for their help in writing this HowTo.

Before you begin

This workflow uses two servers. Server A is the application server where metrics and logs are collected and sent to Server B, which hosts the Elasticsearch and Kibana instances. I use CentOS 7 in my examples, but the steps should be fairly similar on other platforms, even if package management commands and repository names are different.

In the example command lines and configurations, servers will be referred to by the names “servera” and “serverb”. Replace them with their IP addresses or real host names to reflect your environment.

Installation of applications

First we install all necessary applications. Once all components are up and running, we will configure them to work nicely together.

Installation of Server A

Server A runs netdata and syslog-ng. As netdata is quite a new product and develops quickly, it is not yet available in official distribution package repositories. There is a pre-built generic binary available, but installing from source is easy.

1. Install a few development-related packages:

yum install autoconf automake curl gcc git libmnl-devel libuuid-devel lm_sensors make MySQL-python nc pkgconfig python python-psycopg2 PyYAML zlib-devel

2. Clone the netdata git repository:

git clone https://github.com/firehol/netdata.git --depth=1

3. Change to the netdata directory, and start the installation script as root:

cd netdata

./netdata-installer.sh

4. When prompted, hit Enter to continue.

The installer script not only compiles netdata but also starts it and configures systemd to start netdata automatically. When installation completes, the installer script also prints information about how to access, stop, or uninstall the application.

By default, the web server of netdata listens on all interfaces on port 19999. You can test it at http://servera:19999/.

For other platforms or up-to-date instructions on future netdata versions, check https://github.com/firehol/netdata/wiki/Installation#1-prepare-your-system.
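If you prefer to check from the command line that netdata answers on its default port, a small helper like the one below may be handy. It is only a sketch: the function names are made up for this post, and it assumes that curl is installed and that your netdata version serves the /api/v1/charts endpoint of its REST API.

```shell
# Hypothetical helpers, not part of netdata.

# Print the URL of the netdata charts API for a host.
# $1 = host name, $2 = port (defaults to 19999, the port mentioned above).
netdata_charts_url() {
    printf 'http://%s:%s/api/v1/charts\n' "$1" "${2:-19999}"
}

# Succeed if netdata answers on that URL within five seconds.
netdata_is_up() {
    curl --silent --fail --max-time 5 --output /dev/null \
        "$(netdata_charts_url "$@")"
}
```

For example, `netdata_is_up servera && echo "netdata is reachable"` prints the message only when the web server responds.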

5. Once netdata is up and running, install syslog-ng.

Enable EPEL and add a repository with the latest syslog-ng version, with Elasticsearch support enabled:

yum install https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

cat <<EOF | tee /etc/yum.repos.d/czanik-syslog-ng312-epel-7.repo

[czanik-syslog-ng312]

name=Copr repo for syslog-ng312 owned by czanik

baseurl=https://copr-be.cloud.fedoraproject.org/results/czanik/syslog-ng312/epel-7-x86_64/

type=rpm-md

skip_if_unavailable=True

gpgcheck=1

gpgkey=https://copr-be.cloud.fedoraproject.org/results/czanik/syslog-ng312/pubkey.gpg

repo_gpgcheck=0

enabled=1

enabled_metadata=1

EOF

6. Install syslog-ng and Java modules:

yum install syslog-ng syslog-ng-java

7. Make sure that libjvm.so is available to syslog-ng (for additional information, check: https://www.syslog-ng.com/community/b/blog/posts/troubleshooting-java-support-syslog-ng):

echo /usr/lib/jvm/jre/lib/amd64/server > /etc/ld.so.conf.d/java.conf

ldconfig

8. Stop rsyslog, then enable and start syslog-ng:

systemctl stop rsyslog

systemctl enable syslog-ng

systemctl start syslog-ng

9. Once you are ready, you can check whether syslog-ng is up and running by sending it a log message and reading it back:

logger bla

tail -1 /var/log/messages

You should see a similar line on screen:

Nov 14 06:07:24 localhost.localdomain root[39494]: bla

Installation of Server B

Server B runs Elasticsearch and Kibana.

- Install JRE:

yum install java-1.8.0-openjdk.x86_64

- Add the Elasticsearch repository:

cat <<EOF | tee /etc/yum.repos.d/elasticsearch.repo

[elasticsearch-5.x]

name=Elasticsearch repository for 5.x packages

baseurl=https://artifacts.elastic.co/packages/5.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

- Install, enable, and start Elasticsearch:

yum install elasticsearch

systemctl enable elasticsearch.service

systemctl start elasticsearch.service

- Install, enable, and start Kibana:

yum install kibana

systemctl enable kibana.service

systemctl start kibana.service
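Before moving on, it can be worth confirming that Elasticsearch really came up, as it may need a little while to start. The sketch below assumes that curl is available on Server B and uses the standard _cluster/health API; the two function names are my own, chosen only for illustration.

```shell
# Hypothetical helpers for a quick health check.

# Read a cluster health JSON document on stdin and print its "status" field.
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Query a node (default: localhost:9200) and print its cluster status.
es_status() {
    curl --silent --max-time 5 "http://${1:-localhost:9200}/_cluster/health" |
        health_status
}
```

Running `es_status` on Server B should print green, yellow, or red once the node is up. Empty output usually means that Elasticsearch is not answering yet.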

Configuring applications

Once all applications are installed, we can start configuring them.

Configuring Server A

- First, configure syslog-ng. Replace its original configuration in /etc/syslog-ng/syslog-ng.conf with the following configuration:

@version:3.12

@include "scl.conf"

source s_system {

system();

internal();

};

source s_netdata {

network(

transport(tcp)

port(1234)

flags(no-parse)

tags(netdata)

);

};

parser p_netdata {

json-parser(

prefix("netdata.")

);

};

filter f_netdata {

match("users", value("netdata.chart_type"));

};

destination d_elastic {

elasticsearch2 (

cluster("elasticsearch")

client_mode("http")

index("syslog-${YEAR}.${MONTH}")

time-zone(UTC)

type("syslog")

flush-limit(1)

server("serverB")

template("$(format-json --scope rfc5424 --scope nv-pairs --exclude DATE --key ISODATE)")

persist-name(elasticsearch-syslog)

)

};

destination d_elastic_netdata {

elasticsearch2 (

cluster("syslog-ng")

client_mode("http")

index("netdata-${YEAR}.${MONTH}.${DAY}")

time-zone(UTC)

type("netdata")

flush-limit(512)

server("serverB")

template("${MSG}")

persist-name(elasticsearch-netdata)

)

};

log {

source(s_netdata);

parser(p_netdata);

filter(f_netdata);

destination(d_elastic_netdata);

};

log {

source(s_system);

destination(d_elastic);

};

This configuration sends netdata metrics and also all syslog messages to Elasticsearch directly.
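The index() options above decide which Elasticsearch indices the data lands in: one index per month for syslog messages and one per day for netdata metrics. As a rough sketch, the following hypothetical helper prints the names you can expect for messages received today. It assumes that the message time stamps are close to the current time.

```shell
# Hypothetical helper: print the index names used today by the two
# destinations above. Both use time-zone(UTC), so the date is taken
# in UTC as well.
todays_indices() {
    date -u '+syslog-%Y.%m'
    date -u '+netdata-%Y.%m.%d'
}
```

Later, when Server B is configured, you can compare this output with the list returned by `curl 'http://serverb:9200/_cat/indices?v'`.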

- If you want to collect some of the logs locally as well, keep some of the original configuration accordingly, or write your own rules.

- You need to change the server name from serverB to something matching your environment. The f_netdata filter shows one possible way of filtering netdata metrics before storing them in Elasticsearch. Adapt it to your environment.

- Next, configure netdata. Open your configuration (/etc/netdata/netdata.conf), and replace the [backend] section with the following snippet:

[backend]

enabled = yes

type = json

destination = localhost:1234

data source = average

prefix = netdata

update every = 10

buffer on failures = 10

timeout ms = 20000

send charts matching = *

The “send charts matching” setting here serves a similar role to “f_netdata” in the syslog-ng configuration. You can use either of them, but syslog-ng provides more flexibility.
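To see the f_netdata filter at work without waiting for netdata, you can hand-craft a test metric and send it to the s_netdata source once syslog-ng runs with the new configuration. This is a minimal sketch: the function is hypothetical, and apart from chart_type, value, and timestamp (which appear in the configurations of this post), the field names are assumptions rather than the exact format netdata emits.

```shell
# Hypothetical helper: print one JSON document shaped like a netdata metric.
# $1 = chart type, $2 = numeric value. The time stamp is the current
# time in epoch seconds, matching the "epoch_second" date format.
sample_metric() {
    printf '{"hostname":"servera","chart_type":"%s","id":"test","value":%s,"timestamp":%s}\n' \
        "$1" "$2" "$(date +%s)"
}
```

With nc installed, `sample_metric users 1 | nc localhost 1234` sends a document that passes the filter, while `sample_metric cpu 1 | nc localhost 1234` should be dropped. Because the netdata destination uses flush-limit(512), a single test document may not show up in Elasticsearch right away.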

- Finally, restart both netdata and syslog-ng so the configurations take effect. Note that if you used the above configuration, you will no longer see logs arriving in local files. You can check your logs once the Elasticsearch server part is configured.
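Since syslog-ng now handles all logging on Server A, it is a good idea to check the configuration for syntax errors before restarting anything. The sketch below does that with the --syntax-only option of syslog-ng. The function name and the two variables are hypothetical. They exist only so that the commands can be swapped out for a dry run.

```shell
# Commands are kept in variables so they can be replaced for a dry run.
SYSLOG_NG=${SYSLOG_NG:-syslog-ng}
SYSTEMCTL=${SYSTEMCTL:-systemctl}

# Restart syslog-ng and netdata, but only if the syslog-ng
# configuration passes a syntax check first.
restart_if_valid() {
    if "$SYSLOG_NG" --syntax-only; then
        "$SYSTEMCTL" restart syslog-ng netdata
    else
        echo "syslog-ng configuration has errors, nothing restarted" >&2
        return 1
    fi
}
```

Run `restart_if_valid` as root. Setting `SYSTEMCTL=echo` beforehand only prints the restart command instead of executing it.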

Configuring Server B

Elasticsearch controls how data is stored and indexed using index templates. The following two templates will ensure netdata and syslog data have the correct settings.

1. First, copy and save them to files on Server B:

- netdata-template.json:

{

"order" : 0,

"template" : "netdata-*",

"settings" : {

"index" : {

"query": {

"default_field": "_all"

},

"number_of_shards" : "1",

"number_of_replicas" : "0"

}

},

"mappings" : {

"netdata" : {

"_source" : {

"enabled" : true

},

"dynamic_templates": [

{

"string_fields": {

"mapping": {

"type": "keyword",

"doc_values": true

},

"match_mapping_type": "string",

"match": "*"

}

}

],

"properties" : {

"timestamp" : {

"format" : "epoch_second",

"type" : "date"

},

"value" : {

"index" : true,

"type" : "double",

"doc_values" : true

}

}

}

},

"aliases" : { }

}

- syslog-template.json:

{

"order": 0,

"template": "syslog-*",

"settings": {

"index": {

"query": {

"default_field": "MESSAGE"

},

"number_of_shards": "1",

"number_of_replicas": "0"

}

},

"mappings": {

"syslog": {

"_source": {

"enabled": true

},

"dynamic_templates": [

{

"string_fields": {

"mapping": {

"type": "keyword",

"doc_values": true

},

"match_mapping_type": "string",

"match": "*"

}

}

],

"properties": {

"MESSAGE": {

"type": "text",

"index": "true"

}

}

}

},

"aliases": {}

}

2. Once you have saved them, you can use the REST API to push them to Elasticsearch:

curl -XPUT 0:9200/_template/netdata -d@netdata-template.json

curl -XPUT 0:9200/_template/syslog -d@syslog-template.json

3. You can now edit your Elasticsearch configuration file and enable binding to an external interface so it can receive data from syslog-ng. Open /etc/elasticsearch/elasticsearch.yml and set the network.host parameter:

network.host:

  - [serverB_IP]

  - 127.0.0.1

Of course, replace [serverB_IP] with the actual IP address.

4. Restart Elasticsearch so the configuration takes effect.

5. Finally, edit your Kibana configuration (/etc/kibana/kibana.yml), and append the following few lines to the file:

server.port: 5601

server.host: "[serverB_IP]"

server.name: "A_GREAT_TITLE_FOR_MY_LOGS"

elasticsearch.url: "http://127.0.0.1:9200"

As usual, replace [serverB_IP] with the actual IP address.

6. Restart Kibana so the configuration takes effect.
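The two index templates are long, and a missing brace is easy to overlook when copying them from a web page. Before pushing them in step 2, you might want to validate the files first. The following sketch assumes that a Python interpreter is installed (CentOS 7 ships one) and uses its json.tool module. The function name is hypothetical.

```shell
# Pick whichever Python interpreter is installed.
PYTHON=$(command -v python3 || command -v python)

# Hypothetical helper: succeed if the given file contains well-formed JSON.
is_valid_json() {
    "$PYTHON" -m json.tool "$1" > /dev/null 2>&1
}
```

For example, `is_valid_json netdata-template.json && curl -XPUT 0:9200/_template/netdata -d@netdata-template.json` uploads the template only if it parses. Afterwards, `curl '0:9200/_template/netdata?pretty'` shows what Elasticsearch stored.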

Testing

You should now be able to log in to Kibana on port 5601 of Server B. On first use, you need to set up index patterns (syslog-* and netdata-* match the indices created by the configuration above), and then you are ready to query your logs and metrics. If it does not work, here is a list of possible problems:

- “serverb” has not been rewritten to the proper IP address in configurations.

- SELinux is running (for testing, “setenforce 0” is enough, but for production, make sure that SELinux is properly configured).

- The firewall is blocking network traffic.
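If the firewall turns out to be the culprit, the ports used in this post are 9200 (Elasticsearch) and 5601 (Kibana) on Server B. The sketch below assumes that firewalld, the default firewall on CentOS 7, is in use. The function name and the variable are hypothetical, and the variable is there only to allow a dry run.

```shell
# The command is kept in a variable so it can be replaced for a dry run.
FIREWALL_CMD=${FIREWALL_CMD:-firewall-cmd}

# Hypothetical helper: permanently open the given TCP ports,
# then reload the firewall rules so the change takes effect.
open_tcp_ports() {
    for port in "$@"; do
        "$FIREWALL_CMD" --permanent --add-port="${port}/tcp" || return 1
    done
    "$FIREWALL_CMD" --reload
}
```

On Server B, `open_tcp_ports 9200 5601` run as root opens both ports. Keep in mind that this exposes Elasticsearch to every host that can reach Server B, so on anything but a test system you will want to limit access to Server A.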

Further reading

I have given only minimal instructions here to get started with netdata, syslog-ng, and Elasticsearch. You can learn more on their respective documentation pages:

- netdata: https://github.com/firehol/netdata/wiki

- syslog-ng: https://www.syslog-ng.com/technical-documents/doc/syslog-ng-open-source-edition/3.16/administration-guide

- Elasticsearch: https://www.elastic.co/guide/index.html

In my next Elasticsearch blog, I talk about how to secure your Elasticsearch cluster and avoid ransomware.

In my previous blogs in the Elasticsearch series, I covered:

- Basic information about using Elasticsearch with syslog-ng and how syslog-ng can simplify your logging architecture

- Logging to Elasticsearch made simple with syslog-ng

- How to parse data with syslog-ng, store in Elasticsearch, and analyze with Kibana

- How to get started on RHEL/CentOS using syslog-ng in combination with Elasticsearch version 5 and Elasticsearch version 6

Read the entire series about storing logs in Elasticsearch using syslog-ng in a single white paper.